The field of data science is vast, and there are many excellent machine learning tools to choose from. If, like me, you’re fairly new to data science, all of the modeling options can seem overwhelming. But not to worry: the more projects you create, the more models you will use, and the more excited you will become to learn new skills. One of my favorite projects was an image recognition system to detect pneumonia in children’s chest X-rays. I won’t get into all of the details about that project in this post, but I will talk about the modeling approach that I used: neural networks.

What are Neural Networks?

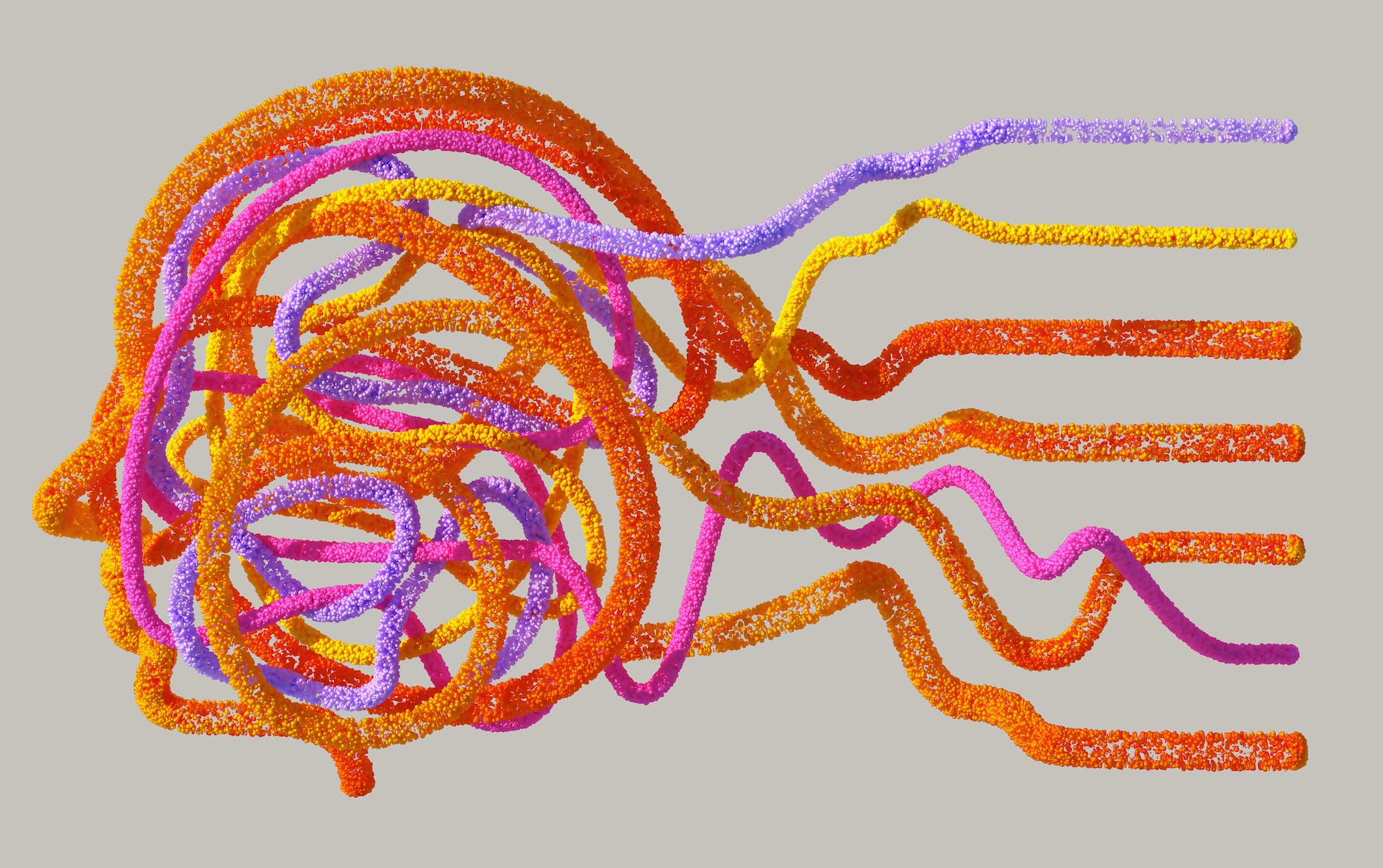

Inspired by the human brain, a neural network is a computational model composed of interconnected neurons, or nodes, organized into layers. Information enters the first layer, the input layer, and is then processed and passed through each additional layer, eventually concluding at an output layer. Because of the interconnected nature of the neurons and the complexity of layering, neural networks can be very powerful at learning and solving various problems.

The Structure of a Neural Network

A typical neural network consists of three parts:

- Input Layer: The input layer is just like it sounds; it is the layer that takes in the information available. This can be an image, text, or any other form of data. In the project I mentioned above, the inputs were images of children’s chest X-rays, each paired with a label stating whether or not the image showed pneumonia. The labels are not passed through the network; they are used during training to check its predictions.

- Hidden Layers: The hidden layers are where all the magic happens. Each one transforms the output of the previous layer using its weights, biases, and an activation function, which lets the network extract hidden patterns and latent features from the input data.

- Output Layer: The final layer returns an output that can be in the form of a classification, regression, or other prediction depending on the problem being solved. In my project, the output layer consisted of a single node that classified the image as showing pneumonia or not showing pneumonia.
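
To make the three parts concrete, here is a minimal sketch of a forward pass in plain Python. It is not the model from my project: the layer sizes, the random weights, the four made-up input values, and the helper names (`dense`, `forward`) are all assumptions chosen for illustration. A real image model would have far more inputs and would learn its weights instead of drawing them at random.

```python
import math
import random


def relu(x):
    # Common hidden-layer activation: negative values become 0.
    return max(0.0, x)


def sigmoid(x):
    # Squashes any number into the range (0, 1), so it can be read as a probability.
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation):
    # One fully connected layer: each node computes activation(w . x + b).
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


random.seed(0)
n_inputs, n_hidden = 4, 3  # hypothetical sizes, picked only to keep the example small

# Untrained, randomly initialized parameters (assumption: training is not shown here).
hidden_w = [[random.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
hidden_b = [0.0] * n_hidden
output_w = [[random.uniform(-1, 1) for _ in range(n_hidden)]]
output_b = [0.0]


def forward(features):
    hidden = dense(features, hidden_w, hidden_b, relu)    # hidden layer
    output = dense(hidden, output_w, output_b, sigmoid)   # single output node
    return output[0]


probability = forward([0.2, 0.8, 0.5, 0.1])  # input layer: four made-up pixel values
label = "pneumonia" if probability >= 0.5 else "normal"
print(f"{probability:.3f} -> {label}")
```

Because the weights here are random, the printed label means nothing yet. The point is only the flow of data: input values, then a hidden layer, then a single output node whose value is thresholded at 0.5.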

How do Neural Networks Learn?

Neural networks excel due to their ability to learn. How does this happen? During training, a neural network makes predictions on a labeled dataset and checks them against the correct labels. It then adjusts its internal parameters, called weights and biases, using gradients computed through a process known as backpropagation.

- Forward Pass: In this phase, data flows from the input layer through the hidden layers to the output layer, producing a prediction.

- Error Calculation: The prediction is compared to the actual output, and the error or loss is computed. This error is a measure of how far off the network’s prediction is from the truth.

- Backpropagation: The error is propagated backward through the network to work out how much each weight and bias contributed to it, and the weights and biases are then adjusted to reduce the error. This iterative process continues until the network’s predictions become sufficiently accurate.

- Batch: Often, the dataset will be split into mini-batches. Each batch goes through the forward pass, error calculation, and backpropagation, and each one allows the model to update its weights and biases to reduce the loss.

- Epoch: When all of the batches have gone through the above cycle (all of the data has been processed), one epoch has been completed. The number of epochs to be run is a hyperparameter that can be adjusted. In my project, I set this to 100 but stipulated that if the loss on my validation data didn’t show any improvement for 10 epochs in a row, the training would end and the best weights and biases (from the epoch that had the lowest loss) would be preserved. None of my models ever ran the full 100 epochs. The right number of epochs, and the right point to stop early, will vary depending on your specific data. Too few epochs can result in underfitting, while too many can result in overfitting.
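
The cycle above (forward pass, error calculation, weight update, batches, epochs, and early stopping) can be sketched in plain Python. To keep it short, this sketch trains a single sigmoid neuron on made-up data, so the backward step is a single gradient formula; in a multi-layer network, backpropagation applies the same idea layer by layer, and a library would normally handle it. The function names (`fit`, `train_on_batch`), the toy data, the batch size, and the learning rate are all assumptions. Only the 100-epoch limit and the patience of 10 come from my project.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(params, x):
    # Forward pass for one sample: weighted sum plus bias, then sigmoid.
    w, b = params
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def mean_loss(params, data):
    # Error calculation: binary cross-entropy averaged over the samples.
    eps = 1e-12  # avoids log(0)
    total = 0.0
    for x, y in data:
        p = predict(params, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def train_on_batch(params, batch, lr):
    # Backward step for a single neuron: average the gradients over the batch,
    # then move the weights and bias a small step against them.
    w, b = params
    grad_w, grad_b = [0.0] * len(w), 0.0
    for x, y in batch:
        error = predict(params, x) - y  # gradient of the loss w.r.t. the weighted sum
        for i, xi in enumerate(x):
            grad_w[i] += error * xi
        grad_b += error
    n = len(batch)
    return [wi - lr * g / n for wi, g in zip(w, grad_w)], b - lr * grad_b / n


def fit(train, val, max_epochs=100, patience=10, batch_size=8, lr=0.5):
    train = list(train)  # copy so shuffling does not reorder the caller's list
    params = ([0.0] * len(train[0][0]), 0.0)
    best_params, best_loss = params, float("inf")
    epochs_without_gain, epochs_run = 0, 0
    for epoch in range(max_epochs):
        random.shuffle(train)
        for start in range(0, len(train), batch_size):  # one mini-batch at a time
            params = train_on_batch(params, train[start:start + batch_size], lr)
        epochs_run = epoch + 1
        val_loss = mean_loss(params, val)
        if val_loss < best_loss:
            # New best epoch: remember its weights and reset the patience counter.
            best_params, best_loss, epochs_without_gain = params, val_loss, 0
        else:
            epochs_without_gain += 1
            if epochs_without_gain >= patience:
                break  # early stopping
    return best_params, best_loss, epochs_run


def make_data(n):
    # Toy data (assumption): label is 1 when the two features sum to more than 0.
    data = []
    for _ in range(n):
        x = [random.uniform(-1, 1), random.uniform(-1, 1)]
        data.append((x, 1 if x[0] + x[1] > 0 else 0))
    return data


random.seed(0)
train_data, val_data = make_data(80), make_data(20)
best_params, best_val_loss, epochs_run = fit(train_data, val_data)
print(f"ran {epochs_run} epochs, best validation loss {best_val_loss:.3f}")
```

Note that `fit` returns the parameters from the epoch with the lowest validation loss, not the ones from the final epoch, which mirrors the "keep the best weights and biases" behavior described above.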

Why Neural Networks are Useful

- Complex Pattern Recognition: Neural networks excel at recognizing complex patterns in data, making them invaluable for tasks such as image and speech recognition, as well as natural language processing.

- Non-Linearity: Unlike traditional linear models, neural networks can capture non-linear relationships in data, making them suitable for a wide range of real-world problems.

- Deep Learning: Deep neural networks, which have multiple hidden layers and are also known as deep learning models, have demonstrated exceptional performance in various fields, including computer vision, speech processing, reinforcement learning, and, as in my project, medical image classification.

- Scalability: Neural networks can be scaled up or down to handle datasets of varying sizes and complexities, making them adaptable to different data science applications.

- Automation: Once trained, neural networks can automate complex decision-making processes, reducing the need for manual intervention in data analysis tasks.
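
The non-linearity point in the list above can be illustrated with the classic XOR problem, where the label is 1 only when exactly one of two inputs is 1. The sketch below is a toy illustration with assumptions throughout: the network weights are set by hand instead of learned, and the search over linear classifiers uses a small hypothetical grid of weight values.

```python
from itertools import product

# XOR: the label is 1 only when exactly one input is 1.
xor_data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def linear_classifier(w1, w2, b, x):
    # A purely linear decision rule: one weighted sum and a threshold.
    return 1 if w1 * x[0] + w2 * x[1] + b > 0 else 0


def relu(z):
    return max(0.0, z)


def tiny_network(x):
    # Two hidden ReLU nodes and one output node, with hand-picked weights.
    h1 = relu(x[0] + x[1])
    h2 = relu(x[0] + x[1] - 1)
    return 1 if h1 - 2 * h2 > 0.5 else 0


# Try every linear classifier on a grid of weights from -2.0 to 2.0 in steps of 0.25.
grid = [i / 4 for i in range(-8, 9)]
best_linear = max(
    sum(linear_classifier(w1, w2, b, x) == y for x, y in xor_data)
    for w1, w2, b in product(grid, repeat=3)
)
network_correct = sum(tiny_network(x) == y for x, y in xor_data)

print(f"best linear classifier: {best_linear}/4 correct")
print(f"tiny network: {network_correct}/4 correct")
```

No straight line separates the XOR points, so the linear search tops out at three of the four, while the small network with a non-linear activation gets all four right.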

Neural networks are the driving force behind many breakthroughs in data science. Their ability to learn from data and make predictions has revolutionized industries and continues to push the boundaries of what’s possible. As a new data scientist, understanding neural networks and their applications will be an exciting and powerful skill in your toolkit.
